4. Create Data Refiner

Now we will define a Refiner for your DataDAO's data. This is a job that will turn each data contribution into a queryable libSQL database, pinned on IPFS, that the Vana Protocol can use. Like Proof-of-Contribution jobs, refiners run in secure compute environments.

- Fork the Data Refinement Template

- Fork the template repository in GitHub here.

- Open the Actions tab for your fork and enable GitHub Actions (if prompted).

- In a local terminal, download your fork, replacing YOUR_GITHUB_USERNAME:

git clone https://github.com/YOUR_GITHUB_USERNAME/vana-data-refinement-template.git

cd vana-data-refinement-template

- Get your REFINEMENT_ENCRYPTION_KEY

- Navigate to the dlpPubKeys(dlpId) method on Vanascan.

- Input your dlpId that you saved in 2. Register DataDAO, and copy the returned key for your .env.

- If the result is an empty string "", wait a few minutes and try again. If you still get an empty string after 30 minutes, please open a support ticket in the Vana Builders Discord.

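The retry guidance for an empty REFINEMENT_ENCRYPTION_KEY can be sketched as a small polling loop. This is only an illustration, not part of the template: `fetch_key` is a hypothetical callable standing in for however you read dlpPubKeys(dlpId) (by hand on Vanascan or through a contract call of your own), and the two-minute interval is an assumed value for "a few minutes".

```python
import time
from typing import Callable, Optional


def wait_for_key(
    fetch_key: Callable[[], str],
    timeout_s: float = 30 * 60,   # the 30-minute limit from the step above
    interval_s: float = 120,      # assumed polling interval
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll fetch_key until it returns a non-empty string or the timeout elapses.

    fetch_key is a hypothetical stand-in for reading dlpPubKeys(dlpId).
    Returns the key, or None if it was still empty after timeout_s seconds.
    """
    waited = 0.0
    while True:
        key = fetch_key()
        if key:
            return key
        if waited >= timeout_s:
            return None  # time to open a support ticket
        sleep(interval_s)
        waited += interval_s
```

The `sleep` parameter exists only so the loop can be exercised without actually waiting.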
- Get your PINATA_API_KEY and PINATA_API_SECRET

- Go to pinata.cloud and log in.

- In the sidebar, click "API Keys"

- Click "New Key"

- Turn on the Admin toggle, give it a name (e.g. "test"), and click Create

- Copy the generated API Key and Secret for your .env

- Create the .env

# Get this key from chain (see above)

REFINEMENT_ENCRYPTION_KEY=0x...

# Optional: IPFS pinning for local test (see above)

PINATA_API_KEY=your_key

PINATA_API_SECRET=your_secret

Tip: These .env parameters are only used for local testing. In production, the actual values are injected automatically when the Contributor UI calls Satya via the /refine method.

You're doing this now to generate and preview the schema your Refiner produces. This is what data consumers will later use to query your DataDAO’s data.
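Before running the local test, it can help to sanity-check the .env file. The sketch below is a hypothetical helper, not part of the template. It assumes the key is a hex string starting with 0x (as the placeholder suggests) and that the optional Pinata credentials are only useful as a pair.

```python
from pathlib import Path
from typing import Dict, List


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_env(values: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the file looks usable."""
    problems = []
    key = values.get("REFINEMENT_ENCRYPTION_KEY", "")
    # Assumption: a real key starts with 0x and is not the "0x..." placeholder.
    if not key.startswith("0x") or len(key) <= 2 or key == "0x...":
        problems.append("REFINEMENT_ENCRYPTION_KEY is missing or still a placeholder")
    # The Pinata credentials are optional, but one without the other is a mistake.
    has_key = bool(values.get("PINATA_API_KEY"))
    has_secret = bool(values.get("PINATA_API_SECRET"))
    if has_key != has_secret:
        problems.append("set both PINATA_API_KEY and PINATA_API_SECRET, or neither")
    return problems


if __name__ == "__main__":
    env_path = Path(".env")
    if env_path.exists():
        for problem in check_env(parse_env(env_path.read_text())):
            print("warning:", problem)
```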

- Update Schema Metadata

Before running the refinement test, make a quick edit to your schema’s metadata — this is required to trigger the GitHub pipeline.

- Open refiner/config.py

- Edit SCHEMA_NAME to something like: "Google Drive Analytics of QuickstartDAO"

- Generate Your Schema

docker build -t refiner .

docker run \
  --rm \
  -v $(pwd)/input:/input \
  -v $(pwd)/output:/output \
  --env-file .env \
  refiner

This will produce two key files in output/:

- db.libsql.pgp: the input data refined into a database, encrypted and pinned to IPFS

- schema.json: table structure for queries
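To confirm the run produced both files, a short check like the following may be useful. It is a minimal sketch: `missing_outputs` is a hypothetical helper name, and it only verifies that each file exists and is non-empty, not that its contents are valid.

```python
from pathlib import Path
from typing import List

# The two files the refiner run is expected to write into output/.
EXPECTED_FILES = ("db.libsql.pgp", "schema.json")


def missing_outputs(output_dir: str) -> List[str]:
    """Return the expected refiner outputs that are absent or empty in output_dir."""
    root = Path(output_dir)
    return [
        name
        for name in EXPECTED_FILES
        if not (root / name).is_file() or (root / name).stat().st_size == 0
    ]


if __name__ == "__main__":
    missing = missing_outputs("output")
    if missing:
        print("missing or empty:", ", ".join(missing))
    else:
        print("both refiner outputs are present")
```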

- Upload Your Schema to IPFS

Now we need to make your schema.json publicly accessible.

- Go to pinata.cloud and log in

- In the sidebar, click "Files" → "Add" → "File Upload"

- Upload the schema.json from your local output/ directory

- After the upload completes, click on the file and copy its URL

You’ll use this in a future step as the schemaDefinitionUrl when registering your Refiner.
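Since the schema URL has to be reachable by others, one way to double-check the upload is to download it and compare it with the local file. This is a sketch under assumptions: `published_schema_matches` is a hypothetical helper, the 30-second timeout is arbitrary, and the URL you pass in is whatever you copied from Pinata.

```python
import json
from pathlib import Path
from urllib.request import urlopen


def published_schema_matches(url: str, local_path: str, timeout_s: float = 30) -> bool:
    """Download url and check that it is valid JSON identical to the local schema file."""
    with urlopen(url, timeout=timeout_s) as response:
        remote = response.read()
    # Raises ValueError if the published file is not valid JSON
    # (for example, if the URL returns an HTML error page).
    json.loads(remote)
    return remote == Path(local_path).read_bytes()
```

Calling it with the copied URL and output/schema.json should return True if the published file is byte-for-byte the one you generated.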

- Commit Changes

git add .

git commit -m "Set up refiner for the DataDAO"

git push origin main

Then, go to your GitHub repo → Actions tab, and you’ll see how the build pipeline automatically builds your refiner and compresses it into a .tar.gz artifact.

- Get the Artifact URL

Once the GitHub Action completes:

- Navigate to your repo’s Releases section

- You’ll find a .tar.gz file; this is your compiled refiner image

- Copy the public URL of this file. You’ll need it in the next step as refinementInstructionUrl. Save it for later too, since you'll also need it in the Contributor UI.

- Register the Refiner Onchain

Once registered, your schema becomes part of the Vana data access layer, enabling apps to query refined data with your approval.

- Navigate to the addRefiner method on Vanascan.

- Fill in the fields:

- dlpId: your dlpId, saved from 2. Register DataDAO (no need to convert from wei with ×10^18).

- name: a label for your Refiner, e.g. "Quickstart Refiner".

- schemaDefinitionUrl: the IPFS URL of your schema from the "Upload Your Schema to IPFS" step above.

- refinementInstructionUrl: the URL to your .tar.gz refiner file from the "Get the Artifact URL" step above.

- Connect your wallet (same as OWNER_ADDRESS)

- Submit the transaction

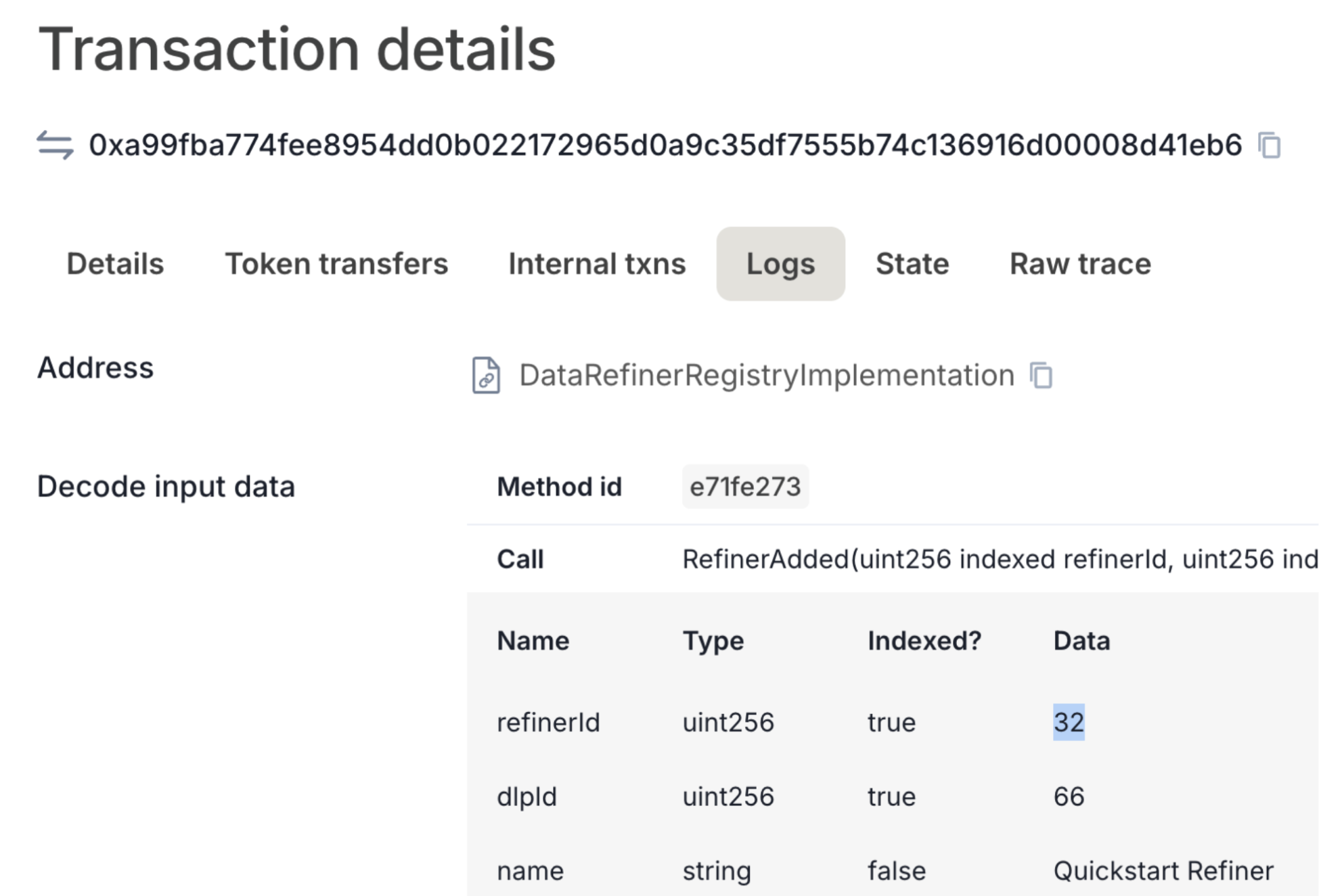
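Because the transaction is submitted by hand, it may be worth checking the four addRefiner fields before pasting them in. The sketch below is illustrative only. The class and its rules are assumptions rather than anything enforced by the contract: it treats a dlpId of 10^18 or more as accidentally wei-scaled, and expects both URLs to be http(s) with the instruction URL ending in .tar.gz.

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import urlparse


def _is_http_url(url: str) -> bool:
    """True if url has an http(s) scheme and a host."""
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


@dataclass(frozen=True)
class RefinerRegistration:
    """Hypothetical container for the four addRefiner fields."""
    dlp_id: int
    name: str
    schema_definition_url: str
    refinement_instruction_url: str

    def problems(self) -> List[str]:
        """Return a list of likely mistakes; empty means nothing obvious is wrong."""
        found = []
        # Assumption: a plain ID is far below 10**18; larger values look wei-scaled.
        if self.dlp_id < 0 or self.dlp_id >= 10**18:
            found.append("dlpId should be the plain ID, not multiplied by 10^18")
        if not self.name.strip():
            found.append("name is empty")
        if not _is_http_url(self.schema_definition_url):
            found.append("schemaDefinitionUrl is not an http(s) URL")
        if not _is_http_url(self.refinement_instruction_url):
            found.append("refinementInstructionUrl is not an http(s) URL")
        elif not urlparse(self.refinement_instruction_url).path.endswith(".tar.gz"):
            found.append("refinementInstructionUrl does not point to a .tar.gz file")
        return found
```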

- Get Your Refiner ID

After submitting the addRefiner transaction:

- Open your transaction on Vanascan

- Go to the Logs tab

- Find the RefinerAdded event and save your refinerId for the next section.

At this point, you’ve got everything needed to make your data query-ready on the Vana network.
