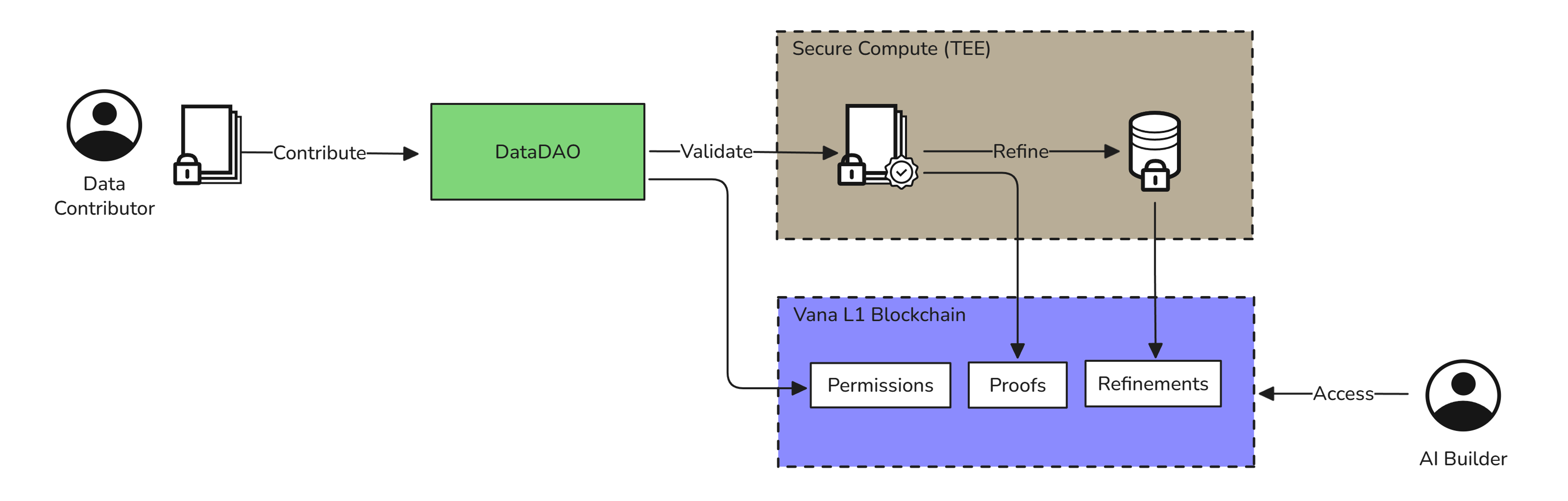

Data Lifecycle Overview

Technical flow from contribution to AI training

Users contribute encrypted data to DataDAOs, validators verify authenticity and assign scores, refinement jobs transform raw data into queryable formats, and AI builders access datasets through secure compute environments.

Builder Roles

- DataDAO builders define validation and refinement logic for specific data types, creating ways for data contributors to bring data onto the network

- AI application builders access the refined datasets that DataDAOs make available and use them for training and inference

- Infrastructure builders extend the validator network and tooling, for example by adding a data preprocessing method that works across datasets or a vector embedding for searching across datasets

Phase 1: Contribution

Users export data from platforms and prepare it for DataDAO submission.

Technical flow:

- User encrypts data client-side with their wallet-controlled private key to maintain ownership

- User uploads encrypted data to their own storage for personal control

- User then encrypts with the DataDAO's public key to enable processing

- DataDAO-accessible encrypted data goes to IPFS for validator processing

- User submits contribution transaction to the Data Liquidity Pool (DLP) contract

The dual encryption model ensures users retain individual control over their data while enabling collective coordination through the DataDAO.
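The ordering of these steps can be sketched as follows. This is an illustration under stated assumptions, not the protocol's implementation. `placeholder_encrypt` is a stand-in keystream XOR that is not secure; a real client would use a wallet-derived key and the DataDAO's public key through an audited encryption library. The sketch also assumes the second encryption produces a separate copy of the raw data, which the description above does not spell out. The function name `prepare_contribution` and the dictionary fields are hypothetical.

```python
import hashlib


def placeholder_encrypt(key: bytes, data: bytes) -> bytes:
    """Stand-in cipher (SHA-256 keystream XOR). NOT secure; illustration only."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    # XOR each data byte with the keystream; applying it twice restores the input.
    return bytes(b ^ s for b, s in zip(data, stream))


def prepare_contribution(raw: bytes, user_key: bytes, dao_key: bytes) -> dict:
    """Produce the two encrypted copies described in the contribution flow."""
    personal_copy = placeholder_encrypt(user_key, raw)  # kept in the user's own storage
    dao_copy = placeholder_encrypt(dao_key, raw)        # uploaded for validator processing
    return {
        "personal_copy": personal_copy,
        "dao_copy": dao_copy,
        # Hypothetical: a digest that a contribution transaction could reference.
        "dao_copy_digest": hashlib.sha256(dao_copy).hexdigest(),
    }


raw_export = b'{"steps": 10432, "date": "2024-01-05"}'
contribution = prepare_contribution(raw_export, b"user-secret", b"dao-secret")
```

Keeping two independently encrypted copies means that access to the DataDAO copy does not depend on, or weaken, the user's control of the personal copy.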

Phase 2: Validation

Data validators verify contribution authenticity using DataDAO-specific proof-of-contribution. This phase determines data quality and token rewards.

Technical flow:

- TEEPool contract (validator coordination system) triggers validation job

- Data validator retrieves encrypted data and proof-of-contribution

- Proof-of-contribution script executes in secure environment, checking format and authenticity, and assigning a score to the data

- Validator generates cryptographic attestation of results

- DataRegistry contract (validation record system) records attestation onchain

- Data contributor earns VRC-20 tokens based on proof-of-contribution scores
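A proof-of-contribution check and its attestation might look roughly like the sketch below. Everything here is assumed for illustration: the required fields, the scoring rule (share of well-formed records), and the use of an HMAC as a stand-in for the attestation a secure environment would produce. None of these names come from the protocol.

```python
import hashlib
import hmac
import json

REQUIRED_FIELDS = ("timestamp", "activity", "value")  # hypothetical schema


def proof_of_contribution(records: list) -> float:
    """Return a score in [0, 1]: the share of records with all required fields."""
    if not records:
        return 0.0
    valid = sum(1 for r in records if all(f in r for f in REQUIRED_FIELDS))
    return valid / len(records)


def attest(file_digest: str, score: float, validator_key: bytes) -> dict:
    """Bind the score to the data digest. HMAC stands in for a real attestation."""
    payload = json.dumps({"file": file_digest, "score": score}, sort_keys=True)
    tag = hmac.new(validator_key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify(attestation: dict, validator_key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(
        validator_key, attestation["payload"].encode(), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, attestation["tag"])


records = [
    {"timestamp": "2024-01-05T07:30:00Z", "activity": "run", "value": 5.2},
    {"activity": "walk"},  # missing fields, so it does not count as valid
]
score = proof_of_contribution(records)
attestation = attest("example-digest", score, b"validator-key")
```

Because the attestation covers both the data digest and the score, changing either one after the fact makes verification fail.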

Phase 3: Refinement

Validated data is processed into structured, queryable formats optimized for AI applications. This transforms raw user data into training-ready datasets.

Technical flow:

- Refinement job triggered on data validators

- Builder-defined refinement scripts transform raw data into structured formats

- Structured data stored in decentralized storage

- DataRefinerRegistry contract (refinement metadata system) records schemas and processing details

Example transformation:

Raw fitness tracker data (JSON files with timestamps, activities, measurements) becomes structured tables with normalized columns, standardized units, and queryable relationships, so that AI models can train efficiently on consistent data formats.
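A refinement script for this kind of transformation could be sketched as below, using Python's built-in `sqlite3` as a stand-in for the structured store. The raw field names (`ts`, `activity`, `distance`, `unit`), the `activities` table, and the choice of kilometers as the standard unit are assumptions made for the example.

```python
import json
import sqlite3

MILES_TO_KM = 1.609344

# Hypothetical raw export: mixed units and inconsistent activity casing.
RAW = """[
  {"ts": "2024-01-05T07:30:00Z", "activity": "Run", "distance": 3.0, "unit": "mi"},
  {"ts": "2024-01-05T18:00:00Z", "activity": "walk", "distance": 2.0, "unit": "km"}
]"""


def refine(raw_json: str, conn: sqlite3.Connection) -> int:
    """Load raw records into a table with normalized columns and units."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS activities ("
        "recorded_at TEXT, activity TEXT, distance_km REAL)"
    )
    rows = []
    for rec in json.loads(raw_json):
        # Standardize every distance to kilometers.
        km = rec["distance"] * MILES_TO_KM if rec["unit"] == "mi" else rec["distance"]
        rows.append((rec["ts"], rec["activity"].lower(), round(km, 3)))
    conn.executemany("INSERT INTO activities VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
inserted = refine(RAW, conn)
refined = conn.execute(
    "SELECT activity, distance_km FROM activities ORDER BY recorded_at"
).fetchall()
print(refined)
```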

Phase 4: Access

AI builders access refined datasets through structured queries and secure computation.

Technical flow:

- Query Engine: Builders define SQL queries against structured datasets to retrieve specific data subsets. They can also write cross-DataDAO queries that combine different kinds of data, joined by wallet.

- Compute Engine: Builders run AI training or inference jobs on the data returned from Query Engine operations

- Both steps run inside secure data validator environments, which enforce DataDAO permissions

Example flow:

- Builder queries: `SELECT user_age, daily_steps FROM fitness_data WHERE activity_level = 'high'`

- Query Engine returns structured dataset of high-activity users

- Builder runs training job on returned data to build fitness recommendation model
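The example flow above can be tried locally with a small sketch. An in-memory SQLite table stands in for the Query Engine, and a least-squares line fit stands in for the training job. The sample rows and the `fit_line` helper are invented for illustration; only the query text comes from the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fitness_data ("
    "user_age INTEGER, daily_steps INTEGER, activity_level TEXT)"
)
# Made-up sample rows; a real dataset would come from refined contributions.
conn.executemany(
    "INSERT INTO fitness_data VALUES (?, ?, ?)",
    [(25, 12000, "high"), (35, 11000, "high"), (45, 10000, "high"), (50, 3000, "low")],
)

# Step 1: the query from the example flow.
rows = conn.execute(
    "SELECT user_age, daily_steps FROM fitness_data WHERE activity_level = 'high'"
).fetchall()


def fit_line(points):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Step 2: a toy "training job" on the returned rows.
slope, intercept = fit_line(rows)
```

The training step only ever sees the rows returned by the query, which mirrors the split between the Query Engine and the Compute Engine.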

All access requires burning VANA tokens (for protocol costs) plus VRC-20 tokens (for dataset access), with value flowing back to contributors based on their ownership stakes.

Token Economics

VANA: Required for all protocol interactions, validator payments, and job execution

VRC-20: Dataset-specific tokens earned through contribution, required for access, used for DataDAO governance

Value flows: Contributors earn tokens through validation → Builders burn tokens for access → Economic value returns to contributors proportionally.
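The proportional return of value can be illustrated with a short calculation. This sketch assumes that each contributor's ownership stake is a single number (for example, a token balance) and that value is split strictly pro rata; the actual distribution rules are not specified here, and `distribute` is a hypothetical helper.

```python
from fractions import Fraction


def distribute(amount: int, stakes: dict) -> dict:
    """Split `amount` across contributors in proportion to their stakes."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    # Fractions keep the split exact, so the shares always sum to `amount`.
    return {who: Fraction(amount * stake, total) for who, stake in stakes.items()}


stakes = {"alice": 60, "bob": 30, "carol": 10}  # hypothetical ownership stakes
shares = distribute(1000, stakes)
```

With these stakes, a payment of 1000 units is split 600, 300, and 100.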
